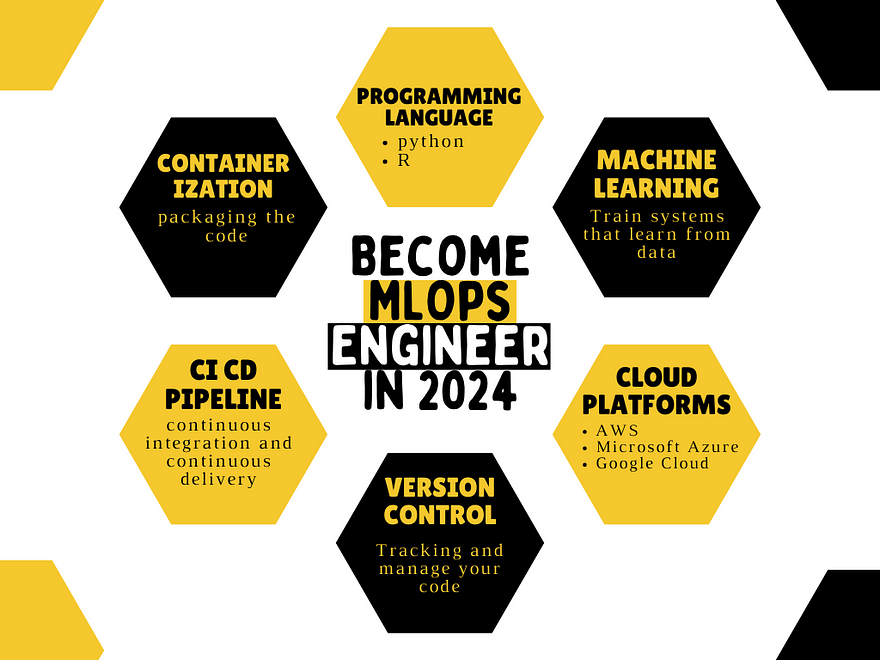

A Comprehensive MLOps Roadmap to Become an MLOps Engineer in 2024

Are you in the AI field and curious about machine learning? Ever wondered about MLOps, and how those cool AI applications get built? 🧐

MLOps might sound like a mysterious term, but fear not! 🤔 In this MLOps roadmap, we’ll explore the mystery of MLOps together. 🌟

I can help you get started! I’ll give you a clear plan to follow, explain what MLOps is all about, and guide you step by step, demystifying MLOps and empowering you to become a skilled MLOps engineer. 💼

Let's start:

1- Programming languages:

- Python

- R

You get to pick your weapon of choice: Python or R — both awesome languages that can turn you into an MLOps pro.🌟

Python for MLOps

- Big toolbox, bigger possibilities: Python boasts a massive ecosystem of libraries and tools, including MLOps favorites like TensorFlow, Kubeflow (through its Python SDK), and Airflow. This gives you tons of tools to tackle any MLOps challenge that comes your way.

- Easy to learn, easy to use: Python’s clear syntax and beginner-friendly nature make it a great choice for those just starting their MLOps journey. You’ll be up and running in no time!

- Plays well with others: Python integrates seamlessly with popular data science and software development tools, making it easy to connect your MLOps pipeline with other parts of your workflow.

Learn Python: https://www.geeksforgeeks.org/python-programming-language/

R for MLOps

- Data visualization champion: R shines in data exploration and visualization, letting you create clear and insightful dashboards to monitor your MLOps pipelines.

- Statistical powerhouse: R’s strength in statistics makes it perfect for tasks like model evaluation and performance analysis, crucial parts of any MLOps process.

- Active R community: The R community is passionate and helpful, providing plenty of support and resources if you get stuck on your MLOps adventure.

Learn R: https://www.tutorialspoint.com/r/index.htm

2- Machine learning:

Machine learning (ML) is the foundation of MLOps. It’s the magic that allows computers to learn from data and make predictions or decisions without being explicitly programmed for each case. But how does ML fit into MLOps? Let’s break it down:

Imagine MLOps as a well-oiled machine:

- Data is the fuel: You feed your MLOps pipeline with data, the raw material for training your ML models.

- Algorithms are the engine: ML algorithms are the heart of your models, learning patterns and insights from the data. Popular algorithms you will meet in MLOps work include:

  - Linear Regression: Great for predicting continuous values, like house prices or sales figures.

  - Decision Trees: Make clear-cut decisions based on a series of questions about your data, perfect for tasks like spam filtering.

  - Random Forests: Combine multiple decision trees for even more robust predictions.

  - Support Vector Machines (SVMs): Excellent at classifying data into different categories, like identifying handwritten digits.

  - Deep Learning: Inspired by the human brain, deep learning excels at complex tasks like image recognition and natural language processing.

- MLOps is the engineer: It automates the entire process, from training and deploying your ML models to monitoring their performance and making sure they stay up-to-date.
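To make the "data is the fuel, algorithms are the engine" picture concrete, here is a minimal sketch of the simplest algorithm on the list, linear regression, written in plain Python. The training data is made up for illustration, and the function names (`fit_linear_regression`, `predict`) are just illustrative choices; in a real project you would more likely reach for a library.

```python
from statistics import mean


def fit_linear_regression(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Slope is the covariance of x and y divided by the variance of x.
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    denominator = sum((x - x_bar) ** 2 for x in xs)
    slope = numerator / denominator
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) pair to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Made-up training data: house size (square meters) -> price (thousands).
sizes = [50, 70, 90, 110]
prices = [150, 210, 270, 330]

model = fit_linear_regression(sizes, prices)
print(predict(model, 100))
```

The "MLOps is the engineer" part is everything around this snippet: rerunning the fit when new data arrives, shipping the resulting model, and watching how its predictions hold up.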

Learn Machine Learning Algorithms: https://www.tutorialspoint.com/machine_learning_with_python/index.htm

Types of Machine Learning: https://deepnexus.blogspot.com/2024/04/types-of-machine-learning-understanding.html?m=1

3- Cloud Platforms — Powering Up Your MLOps

When it comes to building and managing your MLOps pipelines, cloud platforms offer a game-changing advantage. Here’s why:

- Scalability on Demand: Cloud platforms provide elastic resources, allowing you to scale your MLOps infrastructure up or down based on your needs. No more worrying about running out of processing power for complex training jobs!

- Collaboration Made Easy: Cloud platforms facilitate seamless collaboration between data scientists, engineers, and MLOps professionals. Everyone can access and work on projects simultaneously, streamlining the development process.

- Automated Workflows: Cloud platforms excel at automating MLOps tasks, such as model training, deployment, and monitoring. This frees your team to focus on more strategic initiatives.

- Cost-Effectiveness: With cloud platforms, you only pay for the resources you use. This is a significant advantage over traditional on-premise infrastructure, which can be expensive to maintain.

Here are some popular cloud platforms that offer robust MLOps capabilities:

- Google Cloud Platform (GCP): GCP’s Vertex AI suite provides a comprehensive set of MLOps tools, including Vertex AI Pipelines for automation and Vertex AI Experiments for model comparison.

- Amazon Web Services (AWS): AWS offers SageMaker, a managed service for building, training, and deploying machine learning models. It integrates well with other AWS services for a holistic MLOps experience.

- Microsoft Azure: Azure Machine Learning provides a cloud-based environment for your entire MLOps lifecycle. It includes features for data preparation, model training, deployment, and monitoring.

Learn AWS: https://www.tutorialspoint.com/amazon_web_services/index.htm

Learn Google Cloud: https://cloud.google.com/docs/tutorials

Learn Microsoft Azure: https://www.tutorialspoint.com/microsoft_azure/index.htm

4- Version Control: The Superhero of MLOps

Imagine building an amazing MLOps pipeline, but then a small change breaks everything! That’s where version control swoops in to save the day.

Version control is like a time machine for your MLOps projects. It keeps track of every change you make to your code, data, and models, allowing you to:

- Go back in time: Accidentally messed something up? No worries! Revert to a previous version that worked flawlessly.

- Collaborate smoothly: Multiple people working on the MLOps pipeline? Version control ensures everyone’s on the same page, avoiding conflicts and confusion.

- Experiment freely: Try out different approaches without fear. Version control lets you compare versions and see what works best.

- Reproduce results: Need to recreate a specific model or pipeline for future reference? Version control makes it easy to ensure everything’s exactly the same.

How Version Control Works in MLOps

Here’s a simplified breakdown:

- Store everything: Code, data, models — all the ingredients of your MLOps project are stored in a central repository.

- Track changes: Every modification you make is recorded, creating a clear history.

- Branch out: Need to experiment? Create a branch to test changes without affecting the main pipeline.

- Merge and deploy: Once you’re happy with a change, merge it back into the main branch and deploy your updated MLOps pipeline.
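The "store everything, track changes" idea can be sketched in a few lines. Tools such as Git and DVC identify stored content by a hash of its bytes, so any change produces a new version ID. The toy class below imitates only that one idea in memory; `VersionStore` and `content_version` are hypothetical names, and this is not how either tool is actually implemented.

```python
import hashlib


def content_version(data: bytes) -> str:
    """Return a short version ID derived from the content itself."""
    return hashlib.sha256(data).hexdigest()[:12]


class VersionStore:
    """Toy in-memory store that keeps every committed version of an artifact."""

    def __init__(self):
        self._objects = {}  # version ID -> content
        self.history = []   # version IDs in commit order

    def commit(self, data: bytes) -> str:
        """Record a new version and return its ID."""
        version = content_version(data)
        self._objects[version] = data
        self.history.append(version)
        return version

    def checkout(self, version: str) -> bytes:
        """Retrieve the exact content stored under a version ID."""
        return self._objects[version]


store = VersionStore()
v1 = store.commit(b"age,income\n34,52000\n")
v2 = store.commit(b"age,income\n34,52000\n41,61000\n")

# "Go back in time": the first version is still retrievable after the change.
assert store.checkout(v1) == b"age,income\n34,52000\n"
print(store.history)
```

Because the ID depends only on the bytes, committing the same dataset twice gives the same ID, which is what makes "reproduce results" checkable.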

Popular Version Control Systems for MLOps:

- Git: The industry standard, Git offers powerful features for version control and collaboration.

- DVC (Data Version Control): Designed specifically for managing data science projects, DVC works seamlessly with Git for versioning your datasets and models.

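- MLflow: An open-source platform for managing the ML lifecycle. It is not a version control system in the Git sense, but it tracks your experiments (parameters, metrics, and artifacts) and versions your models through its Model Registry.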

Version control is an essential tool for any MLOps practitioner. It ensures stability, collaboration, and the ability to experiment and learn. By embracing version control, you can build robust and reliable MLOps pipelines that deliver real value.

Learn Git: https://www.tutorialspoint.com/git/index.htm

Learn DVC: https://dvc.org/doc/use-cases/versioning-data-and-models/tutorial

Learn MLflow: https://mlflow.org/docs/latest/getting-started/index.html

5- CI/CD Pipeline: Automating Machine Learning with CI/CD

Imagine building a factory that churns out cutting-edge AI models. That’s essentially what an MLOps pipeline does, but instead of physical machines, it uses software automation. Here’s where CI/CD (Continuous Integration and Continuous Delivery/Deployment) comes in as the secret sauce.

CI/CD in MLOps acts like a well-oiled assembly line:

- Version Control (Source Code): Everything starts with your code. Version control systems like Git keep track of changes, ensuring everyone’s on the same page.

- Continuous Integration (CI): A change in your code triggers the CI stage. Here, automated tests validate your code and data to catch errors early, preventing them from reaching later stages.

- Model Training & Validation: The pipeline trains your ML model on the prepared data. CI/CD then runs automated tests to ensure the model performs as expected. This is crucial for catching biases or performance issues before deployment.

- Packaging & Versioning: Once trained and validated, the model gets packaged into a format ready for deployment (think of it as neatly boxing your product). CI/CD also assigns a version number to keep track of changes.

- Continuous Delivery/Deployment (CD): The pipeline automatically deploys the packaged model to the target environment, whether it’s a testing server or production. Here, CI/CD can use different strategies:

  - Continuous Delivery: Automatically deploys the model to a staging environment for final testing. The model is always ready to release, but the push to production waits for a manual approval.

  - Continuous Deployment: Automatically deploys the model all the way to production with no manual step, as long as every earlier stage has passed.
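The model validation stage usually boils down to a "gate": a script that compares the new model's metrics against some rules and tells the pipeline whether to continue. Here is a minimal sketch of one, assuming accuracy as the metric. The thresholds, the hard-coded predictions, and the production accuracy of 0.85 are all made-up values for illustration.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def validation_gate(candidate_acc, production_acc, min_acc=0.80, tolerance=0.01):
    """Return True if the candidate model may move on to deployment.

    The candidate must clear an absolute floor (min_acc) and must not be
    worse than the current production model by more than `tolerance`.
    """
    if candidate_acc < min_acc:
        return False
    return candidate_acc >= production_acc - tolerance


def main():
    # Hypothetical held-out labels and candidate predictions.
    labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]
    candidate_preds = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]

    candidate_acc = accuracy(candidate_preds, labels)
    production_acc = 0.85  # assumed metric of the currently deployed model

    passed = validation_gate(candidate_acc, production_acc)
    print(f"candidate accuracy={candidate_acc:.2f}, gate passed={passed}")
    # CI systems treat a non-zero exit code as a failed stage.
    return 0 if passed else 1


exit_code = main()
```

In a real CI job you would end the script with `sys.exit(exit_code)`, so a failing gate stops the pipeline before the packaging and deployment stages run.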

Benefits of CI/CD in MLOps:

- Faster Innovation: Automating the pipeline lets you iterate on your models quicker, constantly improving their performance.

- Reduced Errors: Automated testing catches bugs early, preventing them from impacting production models.

- Improved Consistency: CI/CD ensures your models are built, trained, and deployed the same way every time, leading to reliable results.

- Better Collaboration: The pipeline streamlines communication between data scientists, engineers, and operations teams.

By implementing a CI/CD pipeline in your MLOps workflow, you can build a robust and efficient system for delivering high-performing machine learning models!

Learn CI/CD:

1- https://www.guru99.com/ci-cd-pipeline.html

2- https://www.tutorialspoint.com/continuous_integration/index.htm

6- Containerization:

Imagine deploying your complex MLOps pipelines across different environments — developer laptops, testing servers, and finally, production! Sounds like a logistical nightmare, right? Well, that’s where containerization comes in as your MLOps superhero!

What exactly is containerization? Think of it like packing all your ML model’s needs — code, libraries, dependencies, and runtime environment — into a neat little box called a container. This container can then be shipped anywhere, and as long as the system has a container engine (like Docker), your model will run exactly the way you intended, no matter the environment. Here’s how containerization benefits MLOps:

- Reproducibility to the rescue! Containers package the same environment for every stage of your MLOps pipeline. This goes a long way toward eliminating the dreaded “it works on my machine” problem, so your model behaves consistently everywhere.

- Deployment becomes a breeze: Containers are lightweight and portable, making it super easy to deploy your models on different platforms, cloud or on-premise. Spin up new instances in seconds, ready to serve predictions!

- Isolation keeps things clean: Containers are isolated from each other and the host system. This prevents conflicts between different models or libraries, keeping your MLOps pipeline stable and secure.

- Scalability for the win! Need to handle more data or predictions? No problem! Simply add more containers to your pipeline. Containers are easily scaled up or down based on your needs.

Tools:

1. Docker: The OG of containerization. Docker is a user-friendly platform for building, sharing, and running containers. It’s a great choice for beginners or smaller MLOps projects.

2. Kubernetes: The container orchestration champion. Once you have a bunch of containers working together in your MLOps pipeline, Kubernetes helps you manage them all. It automates deployments, scaling, and healing, ensuring your containerized models run smoothly.
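So what actually goes inside the box? Often it is a small prediction service like the sketch below: one self-contained program that a container image would start and expose on a port. Everything here is illustrative: the weights, the port number, and the names `predict`, `handle_payload`, and `run_server` are assumptions, and a real service would load a trained model file rather than hard-coded numbers.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical model parameters; a real image would load a trained artifact.
WEIGHTS = [0.5, -0.25]
BIAS = 1.0


def predict(features):
    """Toy linear model: weighted sum of the features plus a bias."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def handle_payload(body: bytes) -> bytes:
    """Turn a JSON request body into a JSON response body."""
    request = json.loads(body)
    result = {"prediction": predict(request["features"])}
    return json.dumps(result).encode("utf-8")


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the request body, run the model, and send back JSON.
        length = int(self.headers.get("Content-Length", 0))
        response = handle_payload(self.rfile.read(length))
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(response)


def run_server(port=8080):
    """Blocks forever; this is the process the container would run."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()


# Quick local check of the request handling, without starting the server.
print(handle_payload(b'{"features": [2.0, 4.0]}'))
```

Calling `run_server()` starts the service. Because the program needs nothing beyond the Python standard library, the container only has to ship a Python runtime and this one file, and running more copies of that same container is how you scale out.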

Learn Containerization with Docker: https://www.geeksforgeeks.org/containerization-using-docker/

Learn Containerization with Kubernetes: https://www.tutorialspoint.com/kubernetes/index.htm
